Analyze My Running Data on AWS - Part 2

Analyzing my running data on AWS

In the first post of this series, I took Garmin .fit files from about 7 years of running, converted them to .csv and put them into a MySQL database using AWS services like Lambda, SQS, and RDS.

I've gone ahead and created some tables in MySQL that do some aggregations around run statistics and heart rate data. I'll use these tables to pull in the data that I'll use to analyze my runs in this post and others.

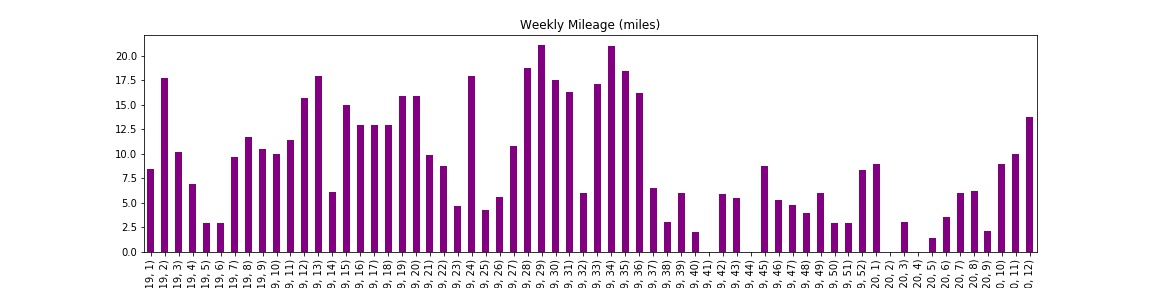

The first thing I want to know is what my weekly mileage has been from 2019 and beyond. To do this, I'm going to query my MySQL database from the run_statistics table. Then I'll merge that with a dataframe consisting of every date including and between the min/max of the run_statistics table so that I can include weeks where there weren't any runs.

Finally I'll graph the weekly milage to see how much I've been running.

import pandas as pd rs_query = 'SELECT * FROM garmin.run_statistics' rs_df = query(rs_query) rs_df['run_date'] = pd.to_datetime(rs_df['run_date']) rs_df.head()

Define a query function - I've left out secrets that I use in AWS.

import pymysql def query(q): _host = '' _port = 3306 _user = '' _pass = '' _database = '' conn = pymysql.connect(host = _host, port = _port, user = _user, password = _pass, db = _database) query = q df = pd.read_sql(query, conn) return df

Now execute the query to get the aggregated run statistics.

# Take the individual run statistics and merge them for missing days

# and then calculate the weekly statistics

def weekly_mileage(rs_df,dates_column='run_date',distance_column='distance'):

min_date = rs_df[dates_column].min()

max_date = rs_df[dates_column].max()

all_dates = pd.date_range(min_date,max_date)

dates_df = pd.DataFrame(all_dates,columns=[dates_column])

weekly_rs_df = pd.merge(left=dates_df,right=rs_df,how='outer',on='run_date',left_index=False,right_index=False)

weekly_rs_df['week'] = weekly_rs_df[dates_column].dt.week

weekly_rs_df['year'] = weekly_rs_df[dates_column].dt.year

weekly_gb_df = weekly_rs_df[weekly_rs_df['year'] >= 2019].groupby(['year','week'])

fig, axes = plt.subplots(figsize=(16,4))

weekly_summed = weekly_gb_df[distance_column].sum()/1609.34

weekly_summed.plot(kind='bar',color='purple')

axes.set_title('Weekly Mileage (miles)')

# upload information # fname = 'weekly_mileage.jpg'

bucket = ''

prefix = 'charts/'

plt.savefig(fname)

s3_client = boto3.client('s3')

s3_client.upload_file(fname, bucket, prefix+fname)

weekly_mileage(rs_df)